Fetching objects from Core Data in a SwiftUI project

When you've added Core Data to your SwiftUI project and you have some data stored in your database, the next hurdle is to somehow fetch that data from your Core Data store and present it to the user.

In this week's post, I will present two different ways that you can use to retrieve data from Core Data and present it in your SwiftUI application. By the end of this post you will be able to:

- Fetch data using the @FetchRequest property wrapper
- Expose data to your SwiftUI views with an observable object and an @Published property.

Since @FetchRequest is by far the simplest approach, let's look at that first.

Fetching data with the @FetchRequest property wrapper

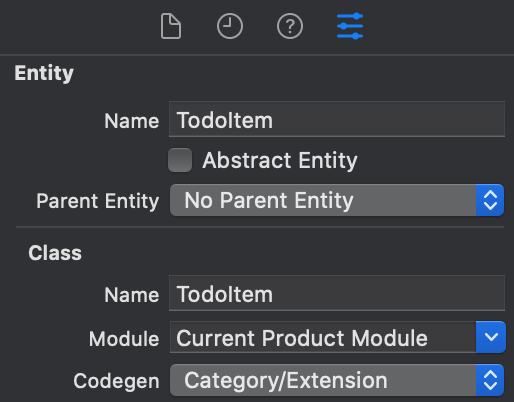

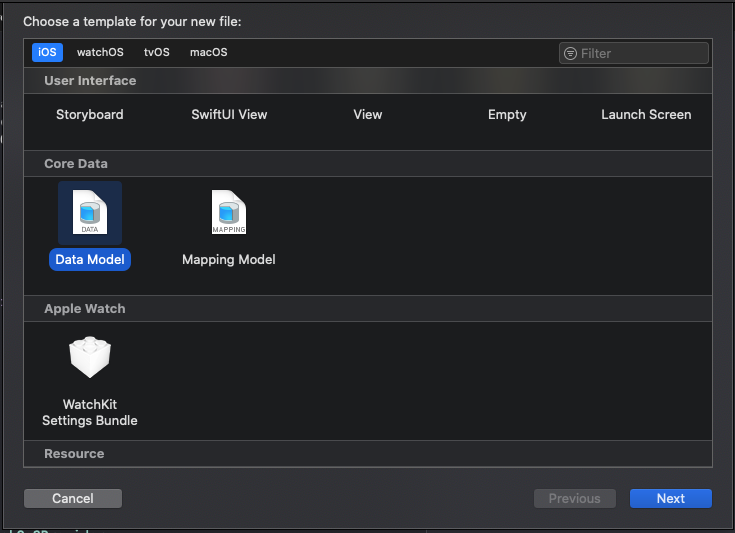

The @FetchRequest property wrapper is arguably the simplest way to fetch data from Core Data in a SwiftUI view. Several flavors of the @FetchRequest property wrapper are available. No matter the flavor that you use, they all require that you inject a managed object context into your view's environment. Without going into too much detail about how to set up your Core Data stack in a SwiftUI app (you can read more about that in this post) or explaining what the environment is in SwiftUI and how it works, the idea is that you assign a managed object context to the \.managedObjectContext keypath on your view's environment.

So for example, you might write something like this in your App struct:

    @main
    struct MyApplication: App {
        // create a property for your persistent container / core data abstraction

        var body: some Scene {
            WindowGroup {
                MainView()
                    .environment(\.managedObjectContext, persistentContainer.viewContext)
            }
        }
    }

When you assign the managed object context to the environment of MainView like this, the managed object context is available inside of MainView and it's automatically passed down to all of its child views.

Inside of MainView, you can create a property that's annotated with @FetchRequest to fetch objects using the managed object context that you injected into MainView's environment. Note that not setting a managed object context on the view's environment while using @FetchRequest will result in a crash.

Let's look at a basic example of @FetchRequest usage:

    struct MainView: View {
        @FetchRequest(
            entity: TodoItem.entity(),
            sortDescriptors: [NSSortDescriptor(key: "dueDate", ascending: true)]
        ) var items: FetchedResults<TodoItem>

        var body: some View {
            List(items) { item in
                Text(item.name)
            }
        }
    }

This version of the @FetchRequest property wrapper takes two arguments. First, the entity description for the object that you want to fetch. You can get a managed object's entity description using its static entity() method. We also need to pass sort descriptors to make sure our fetched objects are sorted properly. If you want to fetch your items without sorting them, you can pass an empty array.

The property that @FetchRequest is applied to has FetchedResults<TodoItem> as its type. Because FetchedResults is a collection type, you can use it in a List the same way that you would use an array.

What's nice about @FetchRequest is that it will automatically refresh your view if any of the fetched objects are updated. This is really nice because it saves you a lot of work in applications where data changes often.

If you're familiar with Core Data you might wonder how you would use an NSPredicate to filter your fetched objects with @FetchRequest. To do this, you can use a different flavor of @FetchRequest:

    @FetchRequest(
        entity: TodoItem.entity(),
        sortDescriptors: [NSSortDescriptor(key: "dueDate", ascending: true)],
        predicate: NSPredicate(format: "dueDate < %@", Date.nextWeek() as CVarArg)
    ) var tasksDueSoon: FetchedResults<TodoItem>

This code snippet passes an NSPredicate to the predicate argument of @FetchRequest. Note that it uses a static method nextWeek() that I defined on Date myself.
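The nextWeek() helper isn't shown in this post, so here's a minimal sketch of what such a helper could look like. It assumes that "next week" means exactly seven calendar days from now. The `from` and `calendar` parameters are my own additions to make the helper easy to test, they're not part of the original helper.

```swift
import Foundation

extension Date {
    /// Hypothetical helper: returns the date seven days after `now`.
    /// The default arguments let you call it as `Date.nextWeek()`.
    static func nextWeek(from now: Date = Date(), calendar: Calendar = .current) -> Date {
        // Calendar math respects daylight saving changes; fall back to a
        // fixed seven-day interval if the calendar can't compute the date.
        calendar.date(byAdding: .day, value: 7, to: now)
            ?? now.addingTimeInterval(7 * 24 * 60 * 60)
    }
}
```

Going through Calendar rather than adding a fixed number of seconds means the result lands on the same time of day a week later, even across a daylight saving change.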

I'm sure you can imagine that using @FetchRequest with more complex sort descriptors and predicates can get quite unwieldy, and you might also want to have a little bit of extra control over your fetch request. For example, you might want to set up relationshipKeyPathsForPrefetching to improve performance if your object has a lot of relationships to other objects.

Note: You can learn more about relationship prefetching and Core Data performance in this post.

You can set up your own fetch request and pass it to @FetchRequest as follows:

    // a convenient extension to set up the fetch request
    extension TodoItem {
        static var dueSoonFetchRequest: NSFetchRequest<TodoItem> {
            let request: NSFetchRequest<TodoItem> = TodoItem.fetchRequest()
            request.predicate = NSPredicate(format: "dueDate < %@", Date.nextWeek() as CVarArg)
            request.sortDescriptors = [NSSortDescriptor(key: "dueDate", ascending: true)]

            return request
        }
    }

    // in your view
    @FetchRequest(fetchRequest: TodoItem.dueSoonFetchRequest)
    var tasksDueSoon: FetchedResults<TodoItem>

I prefer this way of setting up a fetch request because it's more reusable, and it's also a lot cleaner when using @FetchRequest in your views.
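Owning the fetch request also gives you a place to configure the relationshipKeyPathsForPrefetching that I mentioned earlier. The sketch below is a variation on dueSoonFetchRequest that assumes TodoItem has a to-many relationship called tags. That relationship is hypothetical, and so is the stand-in TodoItem class that I've included purely so the snippet compiles on its own. In a real project Xcode generates this class from your model.

```swift
import CoreData

// Stand-in for the post's TodoItem so this sketch compiles on its own.
// The `tags` relationship is an assumption made for illustration.
@objc(TodoItem)
final class TodoItem: NSManagedObject {
    @NSManaged var name: String?
    @NSManaged var dueDate: Date?
    @NSManaged var tags: NSSet?
}

extension TodoItem {
    /// Same predicate and sorting as the due-soon request, plus prefetching.
    static var dueSoonWithTagsFetchRequest: NSFetchRequest<TodoItem> {
        let request = NSFetchRequest<TodoItem>(entityName: "TodoItem")

        // Computed inline so the sketch doesn't depend on Date.nextWeek().
        let nextWeek = Calendar.current.date(byAdding: .day, value: 7, to: Date()) ?? Date()
        request.predicate = NSPredicate(format: "dueDate < %@", nextWeek as NSDate)
        request.sortDescriptors = [NSSortDescriptor(key: "dueDate", ascending: true)]

        // Ask Core Data to load the related tags together with the todo items
        // instead of firing a fault for each item later on.
        request.relationshipKeyPathsForPrefetching = ["tags"]

        return request
    }
}
```

You would pass this request to @FetchRequest(fetchRequest:) in exactly the same way as before.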

While you can fetch data from Core Data with @FetchRequest just fine, I tend to avoid it in my apps. The main reason for this is that I've always tried to separate my Core Data code from the rest of my application as much as possible. This means that my views should be as unaware of Core Data as they can possibly be. Unfortunately, @FetchRequest by its very definition is incompatible with this approach. Views must have access to a managed object context in their environment and the view manages an object that fetches data directly from Core Data.

Luckily, we can use ObservableObject and the @Published property wrapper to create an object that fetches objects from Core Data, exposes them to your view, and updates when needed.

Building a Core Data abstraction for a SwiftUI view

There is more than one way to build an abstraction that fetches data from Core Data and updates your views as needed. In this section, I will show you an approach that should fit common use cases where the only prerequisite is that you have a property to sort your fetched objects on. Usually, this shouldn't be a problem because an unsorted list in Core Data will always come back in an undefined order which, in my experience, is not desirable for most applications.

The simplest way to fetch data using a fetch request while responding to any changes that impact your fetch request's results is to use an NSFetchedResultsController. While this object is commonly used in conjunction with table views and collection views, we can also use it to drive a SwiftUI view.

Let's look at some code:

    import Combine
    import CoreData

    class TodoItemStorage: NSObject, ObservableObject {
        // the items that a SwiftUI view reads from
        @Published var dueSoon: [TodoItem] = []
        private let dueSoonController: NSFetchedResultsController<TodoItem>

        init(managedObjectContext: NSManagedObjectContext) {
            dueSoonController = NSFetchedResultsController(fetchRequest: TodoItem.dueSoonFetchRequest,
                                                           managedObjectContext: managedObjectContext,
                                                           sectionNameKeyPath: nil, cacheName: nil)

            super.init()

            dueSoonController.delegate = self

            do {
                // perform the initial fetch and publish its results
                try dueSoonController.performFetch()
                dueSoon = dueSoonController.fetchedObjects ?? []
            } catch {
                print("failed to fetch items!")
            }
        }
    }

    extension TodoItemStorage: NSFetchedResultsControllerDelegate {
        func controllerDidChangeContent(_ controller: NSFetchedResultsController<NSFetchRequestResult>) {
            guard let todoItems = controller.fetchedObjects as? [TodoItem]
            else { return }

            // republish the updated results
            dueSoon = todoItems
        }
    }

While there's a bunch of code in the snippet above, the contents are fairly straightforward. I created an ObservableObject that has an @Published property called dueSoon. This is the property that a SwiftUI view would pull its data from.

Note that my TodoItemStorage inherits from NSObject. This is required by the NSFetchedResultsControllerDelegate protocol that I'll talk about in a moment.

In the initializer for TodoItemStorage I create an instance of NSFetchedResultsController. I also assign a delegate to my fetched results controller so we can respond to changes, and I call performFetch to fetch the initial set of data. Next, I assign the fetched results controller's fetched objects to my dueSoon property. The fetchedObjects property of a fetched results controller holds all of the managed objects that it retrieved for our fetch request.

The controllerDidChangeContent method in my extension is an NSFetchedResultsControllerDelegate method that's called whenever the fetched results controller adds, removes, or updates any of the items that it fetched. By assigning the fetched results controller's fetchedObjects to dueSoon again, the @Published property is updated and your SwiftUI view is updated.
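If you're wondering why reassigning dueSoon is enough to update a view, here's a small sketch that leaves Core Data out of the picture. SketchStorage is a hypothetical stand-in for TodoItemStorage that publishes strings instead of managed objects. Every assignment to an @Published property makes the object's objectWillChange publisher emit, and that's the signal SwiftUI subscribes to when you use @ObservedObject or @StateObject.

```swift
import Combine

// Hypothetical stand-in for TodoItemStorage, using strings instead of TodoItem.
final class SketchStorage: ObservableObject {
    @Published var dueSoon: [String] = []
}

let sketchStorage = SketchStorage()
var changeCount = 0

// SwiftUI sets up a subscription like this one on your behalf.
let cancellable = sketchStorage.objectWillChange.sink {
    changeCount += 1
}

// Each assignment emits once, just like the assignment in controllerDidChangeContent.
sketchStorage.dueSoon = ["Write post"]
sketchStorage.dueSoon = ["Write post", "Publish post"]

print(changeCount) // prints 2
```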

Let's see how you would use this TodoItemStorage in an application:

    @main
    struct MyApplication: App {
        let persistenceManager: PersistenceManager
        @StateObject var todoItemStorage: TodoItemStorage

        init() {
            let manager = PersistenceManager()
            self.persistenceManager = manager

            let managedObjectContext = manager.persistentContainer.viewContext
            let storage = TodoItemStorage(managedObjectContext: managedObjectContext)
            self._todoItemStorage = StateObject(wrappedValue: storage)
        }

        var body: some Scene {
            WindowGroup {
                MainView(todoItemStorage: todoItemStorage)
            }
        }
    }

Before we look at what MainView would look like in this example, let's talk about the code in this snippet. I'm using a PersistenceManager object in this example. To learn more about this object and what it does, refer back to an earlier post I wrote about using Core Data in a SwiftUI 2.0 application.

Note that the approach I'm using in this code works for iOS 14 and above. However, the principle of this code applies to iOS 13 too. The only difference is that you would initialize the TodoItemStorage in your SceneDelegate and pass it to your MainView from there rather than making it an @StateObject on the App struct.

In the init for MyApplication I create my PersistenceManager and extract a managed object context from it. I then create an instance of my TodoItemStorage, and I wrap it in a StateObject. Unfortunately, we can't assign values to an @StateObject directly so we need to use the _ prefixed property and assign it an instance of StateObject.

Lastly, in the body I create MainView and pass it the todoItemStorage.

Let's look at MainView:

    struct MainView: View {
        // named todoItemStorage to match the MainView(todoItemStorage:) call in MyApplication
        @ObservedObject var todoItemStorage: TodoItemStorage

        var body: some View {
            List(todoItemStorage.dueSoon) { item in
                Text(item.name)
            }
        }
    }

Pretty lean, right? All MainView knows is that it has a reference to an instance of TodoItemStorage which has an @Published property that exposes todo items that are due soon. It doesn't know about Core Data or fetch requests at all. It just knows that whenever TodoItemStorage changes, it should re-render the view. And because TodoItemStorage is built on top of NSFetchedResultsController we can easily update the dueSoon property when needed.

While this approach is going to work fine, it does sacrifice some of the optimizations that you get with NSFetchedResultsController. For example, NSFetchedResultsController frees up memory whenever it can by only keeping a certain number of objects in memory and (re-)fetching objects as needed. The wrapper I created does not have such an optimization and forces the fetched results controller to load all objects into memory, and they are then kept in memory by the @Published property. In my experience this shouldn't pose problems for a lot of applications but it's worth pointing out since it's a big difference from how NSFetchedResultsController normally works.

While the approach in this post might not suit all applications, the general principles are almost universally applicable. If an NSFetchedResultsController doesn't work for your purposes, you could listen to Core Data related notifications in NotificationCenter yourself and perform a new fetch request if needed. This can all be managed from within your storage object and shouldn't require any changes to your view code.
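To give you an idea of what the notification-based alternative could look like, here's a minimal sketch. It's not a drop-in replacement for the storage object from this post. The class name NotificationBackedTodoItemStorage is made up, the TodoItem class is a stand-in so the snippet compiles on its own, and I'm assuming you pass in a main queue context like viewContext.

```swift
import Combine
import CoreData

// Stand-in for the post's TodoItem; Xcode normally generates this class.
@objc(TodoItem)
final class TodoItem: NSManagedObject {
    @NSManaged var name: String?
    @NSManaged var dueDate: Date?
}

// Hypothetical storage object that refetches when its context changes.
final class NotificationBackedTodoItemStorage: ObservableObject {
    @Published private(set) var dueSoon: [TodoItem] = []

    private let managedObjectContext: NSManagedObjectContext
    private var cancellable: AnyCancellable?

    init(managedObjectContext: NSManagedObjectContext) {
        self.managedObjectContext = managedObjectContext

        // Core Data posts this notification when objects in the given
        // context are inserted, updated, or deleted.
        cancellable = NotificationCenter.default
            .publisher(for: .NSManagedObjectContextObjectsDidChange,
                       object: managedObjectContext)
            .sink { [weak self] _ in self?.refetch() }

        refetch()
    }

    private func refetch() {
        let request = NSFetchRequest<TodoItem>(entityName: "TodoItem")
        let nextWeek = Calendar.current.date(byAdding: .day, value: 7, to: Date()) ?? Date()
        request.predicate = NSPredicate(format: "dueDate < %@", nextWeek as NSDate)
        request.sortDescriptors = [NSSortDescriptor(key: "dueDate", ascending: true)]

        do {
            // Assigning to the @Published property updates any observing view.
            dueSoon = try managedObjectContext.fetch(request)
        } catch {
            print("failed to fetch items: \(error)")
        }
    }
}
```

Keep in mind that this sketch runs a full fetch for every change in the context, including changes that don't affect the due-soon list. A real implementation would probably inspect the notification's userInfo first to decide whether a refetch is needed at all.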

In my opinion, this is one of the powers of hiding Core Data behind a simple storage abstraction.

In Summary

In this week's post, we took a look at fetching objects from Core Data in a SwiftUI application. First, you learned about the built-in @FetchRequest property wrapper and saw several different ways to use it. You also learned that this property wrapper creates a tight coupling between your views and Core Data which, in my opinion, is not great.

After that, you saw an example of a small abstraction that hides Core Data from your views. This abstraction is built on top of NSFetchedResultsController which is a very convenient way to fetch data using a fetch request, and receive updates whenever the result of the fetch request changes. You saw that you can update an @Published property whenever the fetched results controller changes its contents. The result of doing this was a view that is blissfully unaware of Core Data and fetch requests.

If you have any questions about this post, or if you have feedback for me, don't hesitate to reach out to me on Twitter.