What is @concurrent in Swift 6.2?

Swift 6.2 is available and it comes with several improvements to Swift Concurrency. One of these features is the @concurrent attribute that we can apply to nonisolated functions. In this post, you will learn a bit more about what @concurrent is, why it was added to the language, and when you should be using @concurrent.

Before we dig into @concurrent itself, I’d like to provide a little bit of context by exploring another Swift 6.2 feature called nonisolated(nonsending) because without that, @concurrent wouldn’t exist at all.

And to make sense of nonisolated(nonsending) we’ll go back to nonisolated functions.

Exploring nonisolated functions

A nonisolated function is a function that’s not isolated to any specific actor. If you’re on Swift 6.1, or you’re using Swift 6.2 with default settings, that means that a nonisolated async function will always run on the global executor.

In more practical terms, a nonisolated async function would run its work on a background thread.

For example, the following function would run away from the main actor at all times:

    nonisolated
    func decode<T: Decodable>(_ data: Data) async throws -> T {
        // ...
    }

While it’s a convenient way to run code on the global executor, this behavior can be confusing. If we remove the async from that function, it will always run on the caller’s actor:

    nonisolated
    func decode<T: Decodable>(_ data: Data) throws -> T {
        // ...
    }

So if we call this version of decode(_:) from the main actor, it will run on the main actor.
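To make the synchronous case concrete, here is a minimal sketch. The names byteSum and sumOnMainActor are hypothetical and not part of the original example, and MainActor.preconditionIsolated() assumes a recent OS (macOS 14 / iOS 17 or newer).

```swift
import Foundation

// Hypothetical helper, not from the post: a synchronous nonisolated function.
nonisolated
func byteSum(_ data: Data) -> Int {
    // Traps if we are not running on the main actor. This check only
    // passes because the caller below is main actor isolated.
    MainActor.preconditionIsolated()
    return data.reduce(0) { $0 + Int($1) }
}

@MainActor
func sumOnMainActor() -> Int {
    // No await needed: the synchronous call stays on the main actor.
    byteSum(Data([1, 2, 3]))
}
```

Calling byteSum from a different actor would make the precondition fail, which is exactly the point: a synchronous nonisolated function has no executor of its own and simply runs wherever its caller runs.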

Since that difference in behavior can be unexpected and confusing, the Swift team has added nonisolated(nonsending). So let’s see what that does next.

Exploring nonisolated(nonsending) functions

Any function that’s marked as nonisolated(nonsending) will always run on the caller’s executor. This unifies behavior for async and non-async functions and can be applied as follows:

    nonisolated(nonsending)
    func decode<T: Decodable>(_ data: Data) async throws -> T {
        // ...
    }

Whenever you mark a function like this, it no longer automatically offloads to the global executor. Instead, it will run on the caller’s actor.

This doesn’t just unify behavior for async and non-async functions, it also makes our code less concurrent and easier to reason about.

When we offload work to the global executor, this means that we’re essentially creating new isolation domains. The result of that is that any state that’s passed to or accessed inside of our function is potentially accessed concurrently if we have concurrent calls to that function.

This means that we must make the accessed or passed-in state Sendable, and that can become quite a burden over time. For that reason, making functions nonisolated(nonsending) makes a lot of sense. It runs the function on the caller’s actor (if any) so if we pass state from our call-site into a nonisolated(nonsending) function, that state doesn’t get passed into a new isolation context; we stay in the same context we started out from. This means less concurrency, and less complexity in our code.
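As a rough illustration of that last point, the sketch below passes a non-Sendable object from the main actor into a nonisolated(nonsending) function. Counter, increment, and CounterModel are hypothetical names made up for this example.

```swift
// Hypothetical non-Sendable type, used only for illustration.
final class Counter {
    var value = 0
}

nonisolated(nonsending)
func increment(_ counter: Counter) async {
    // Runs on the caller's actor, so `counter` never leaves the
    // isolation domain it started in.
    counter.value += 1
}

@MainActor
final class CounterModel {
    let counter = Counter()

    func bump() async {
        // No Sendable conformance needed: the call stays on the main actor.
        await increment(counter)
    }
}
```

If increment were a plain nonisolated async function that hops to the global executor, the compiler would be expected to reject the call in bump() because counter would have to cross an isolation boundary.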

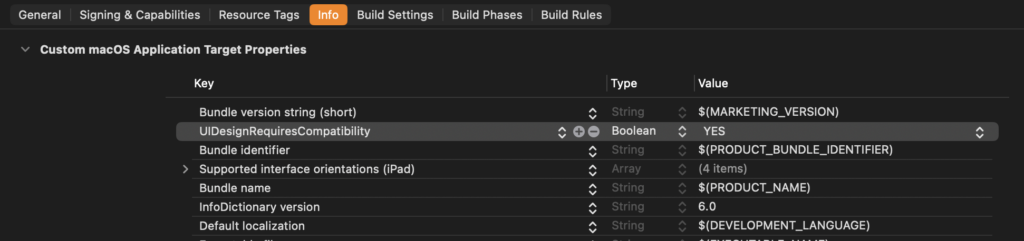

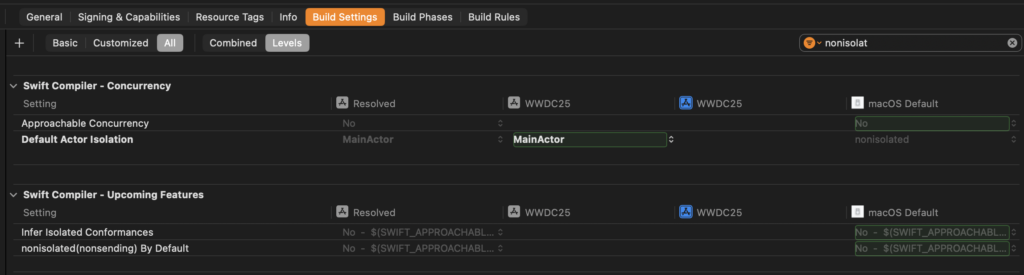

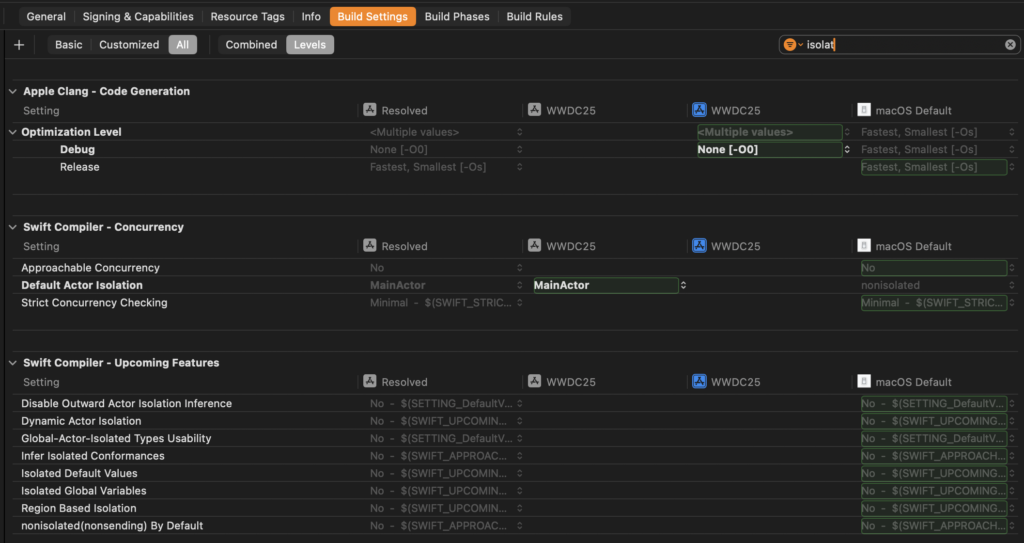

The benefits of nonisolated(nonsending) can really add up, which is why you can make it the default for your nonisolated async functions by opting in to Swift 6.2’s NonisolatedNonsendingByDefault feature flag.
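In a Swift package, opting in could look like the manifest sketch below. The package and target name FeedKit is a placeholder; the upcoming feature identifier is spelled NonisolatedNonsendingByDefault.

```swift
// swift-tools-version: 6.2
import PackageDescription

let package = Package(
    name: "FeedKit", // placeholder name for illustration
    targets: [
        .target(
            name: "FeedKit",
            swiftSettings: [
                // Makes nonisolated async functions run on the caller's actor.
                .enableUpcomingFeature("NonisolatedNonsendingByDefault")
            ]
        )
    ]
)
```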

When your code is nonisolated(nonsending) by default, every async function that’s either explicitly or implicitly nonisolated will be treated as nonisolated(nonsending). This means that we need a new way to offload work to the global executor.

Enter @concurrent.

Offloading work with @concurrent in Swift 6.2

Now that you know a bit more about nonisolated and nonisolated(nonsending), we can finally understand @concurrent.

Using @concurrent makes most sense when you’re using the NonisolatedNonsendingByDefault feature flag as well. Without that feature flag, you can continue using nonisolated to achieve the same “offload to the global executor” behavior. That said, marking functions as @concurrent can future-proof your code and make your intent explicit.

With @concurrent we can ensure that a nonisolated function runs on the global executor:

    @concurrent
    func decode<T: Decodable>(_ data: Data) async throws -> T {
        // ...
    }

Marking a function as @concurrent will automatically mark that function as nonisolated so you don’t have to write @concurrent nonisolated. We can apply @concurrent to any function that doesn’t have its isolation explicitly set. For example, you can apply @concurrent to a function that’s defined on a main actor isolated type:

    @MainActor
    class DataViewModel {
        @concurrent
        func decode<T: Decodable>(_ data: Data) async throws -> T {
            // ...
        }
    }

Or even to a function that’s defined on an actor:

    actor DataViewModel {
        @concurrent
        func decode<T: Decodable>(_ data: Data) async throws -> T {
            // ...
        }
    }

You’re not allowed to apply @concurrent to functions that have their isolation defined explicitly. Both examples below are incorrect since the function would have conflicting isolation settings.

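    // Invalid: @concurrent conflicts with @MainActor
    @concurrent @MainActor
    func decode<T: Decodable>(_ data: Data) async throws -> T {
        // ...
    }

    // Invalid: @concurrent conflicts with nonisolated(nonsending)
    @concurrent nonisolated(nonsending)
    func decode<T: Decodable>(_ data: Data) async throws -> T {
        // ...
    }

Knowing when to use @concurrent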

Using @concurrent is an explicit declaration to offload work to a background thread. Note that doing so introduces a new isolation domain and will require any state involved to be Sendable. That’s not always an easy thing to pull off.
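A small sketch of what that requirement looks like in practice: the value handed to a @concurrent function crosses an isolation boundary, so a Sendable value type is the easiest thing to pass. ImageRequest and payloadSize are hypothetical names invented for this example.

```swift
import Foundation

// Hypothetical value type; all stored properties are Sendable,
// so the struct can safely cross isolation boundaries.
struct ImageRequest: Sendable {
    let id: Int
    let payload: Data
}

@concurrent
func payloadSize(of request: ImageRequest) async -> Int {
    // Runs on the global executor, away from the caller's actor.
    request.payload.count
}
```

Had ImageRequest been a class with mutable state, it would need its own synchronization before it could be marked Sendable, which is where the burden mentioned above comes from.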

In most apps, you only want to introduce @concurrent when you have a real issue to solve where more concurrency helps you.

An example of a case where @concurrent should not be applied is the following:

    class Networking {
        func loadData(from url: URL) async throws -> Data {
            // The response is not used here, so it is discarded.
            let (data, _) = try await URLSession.shared.data(from: url)
            return data
        }
    }

The loadData function makes a network call that it awaits with the await keyword. That means that while the network call is active, we suspend loadData. This allows the calling actor to perform other work until loadData is resumed and data is available.

So when we call loadData from the main actor, the main actor would be free to handle user input while we wait for the network call to complete.

Now let’s imagine that you’re fetching a large amount of data that you need to decode. You started off without any explicit isolation annotations:

    class Networking {
        func getFeed() async throws -> Feed {
            let data = try await loadData(from: Feed.endpoint)
            let feed: Feed = try await decode(data)
            return feed
        }

        func loadData(from url: URL) async throws -> Data {
            // The response is not used here, so it is discarded.
            let (data, _) = try await URLSession.shared.data(from: url)
            return data
        }

        func decode<T: Decodable>(_ data: Data) async throws -> T {
            let decoder = JSONDecoder()
            return try decoder.decode(T.self, from: data)
        }
    }

In this example, with NonisolatedNonsendingByDefault enabled, all of our functions would run on the caller’s actor, for example the main actor. When we find that decode takes a lot of time because we fetched a whole bunch of data, we can decide that our code would benefit from some concurrency in the decoding department.

To do this, we can mark decode as @concurrent:

    class Networking {
        // ...

        @concurrent
        func decode<T: Decodable>(_ data: Data) async throws -> T {
            let decoder = JSONDecoder()
            return try decoder.decode(T.self, from: data)
        }
    }

All of our other code will continue behaving like it did before by running on the caller’s actor. Only decode will run on the global executor, ensuring we’re not blocking the main actor during our JSON decoding.
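To see the hop from a caller’s point of view, here is a self-contained sketch. It is not the Networking class from above: Feed, decodeFeed, and FeedViewModel are hypothetical stand-ins, and decodeFeed is written as a free function so that only Sendable values (Data in, Feed out) cross the isolation boundary.

```swift
import Foundation

// Hypothetical model for illustration; the real Feed type is not shown in the post.
struct Feed: Decodable, Sendable {
    let title: String
}

@concurrent
func decodeFeed(_ data: Data) async throws -> Feed {
    // Runs on the global executor, so the main actor is not blocked.
    try JSONDecoder().decode(Feed.self, from: data)
}

@MainActor
final class FeedViewModel {
    private(set) var title = ""

    func update(with data: Data) async throws {
        // Suspends here while decoding happens off the main actor.
        let feed = try await decodeFeed(data)
        // Back on the main actor, so updating state is safe.
        title = feed.title
    }
}
```

While update(with:) is suspended, the main actor is free to do other work, and the assignment to title only happens once execution has returned to the main actor.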

We made the smallest unit of work possible @concurrent to avoid introducing loads of concurrency where we don’t need it. Introducing concurrency with @concurrent is not a bad thing but we do want to limit the amount of concurrency in our app. That’s because concurrency comes with a pretty high complexity cost, and less complexity in our code typically means that we write code that’s less buggy, and easier to maintain in the long run.