Changes to location access in iOS 13

If you're working on an app that requires access to a user's location, even when your user has sent your app to the background, you might have noticed that when you ask the user for the appropriate permission, iOS 13 shows a different permissions dialog than you might expect. In iOS 12 and below, when you ask for so-called always permissions, the user can choose to allow this, allow location access only in the foreground, or not allow location access at all. In iOS 13, the user can choose to allow access once, while in use or not at all. The allow always option is missing from the permissions dialog completely.

In this post, you will learn what changes were made to location access in iOS 13, why there is no more allow always option and lastly you'll learn what allow once means for your app. We have a lot to cover so let's dive right in.

Asking for background location permissions in iOS 13

The basic rules and principles of asking for location permissions in applications haven't changed since iOS 12. So all of the code you wrote to ask a user for location permissions should still work the same in iOS 13 as it did in iOS 12. The major differences in location permissions are user-facing. In particular, Apple has doubled down on security and user-friendliness when it comes to background location access. A user's location is extremely privacy-sensitive data and as a developer, you should treat a user's location with extreme caution. For this reason, Apple decided that accessing a user's location in the background deserves a special, context-sensitive prompt rather than a prompt that is presented while your app is in the foreground.

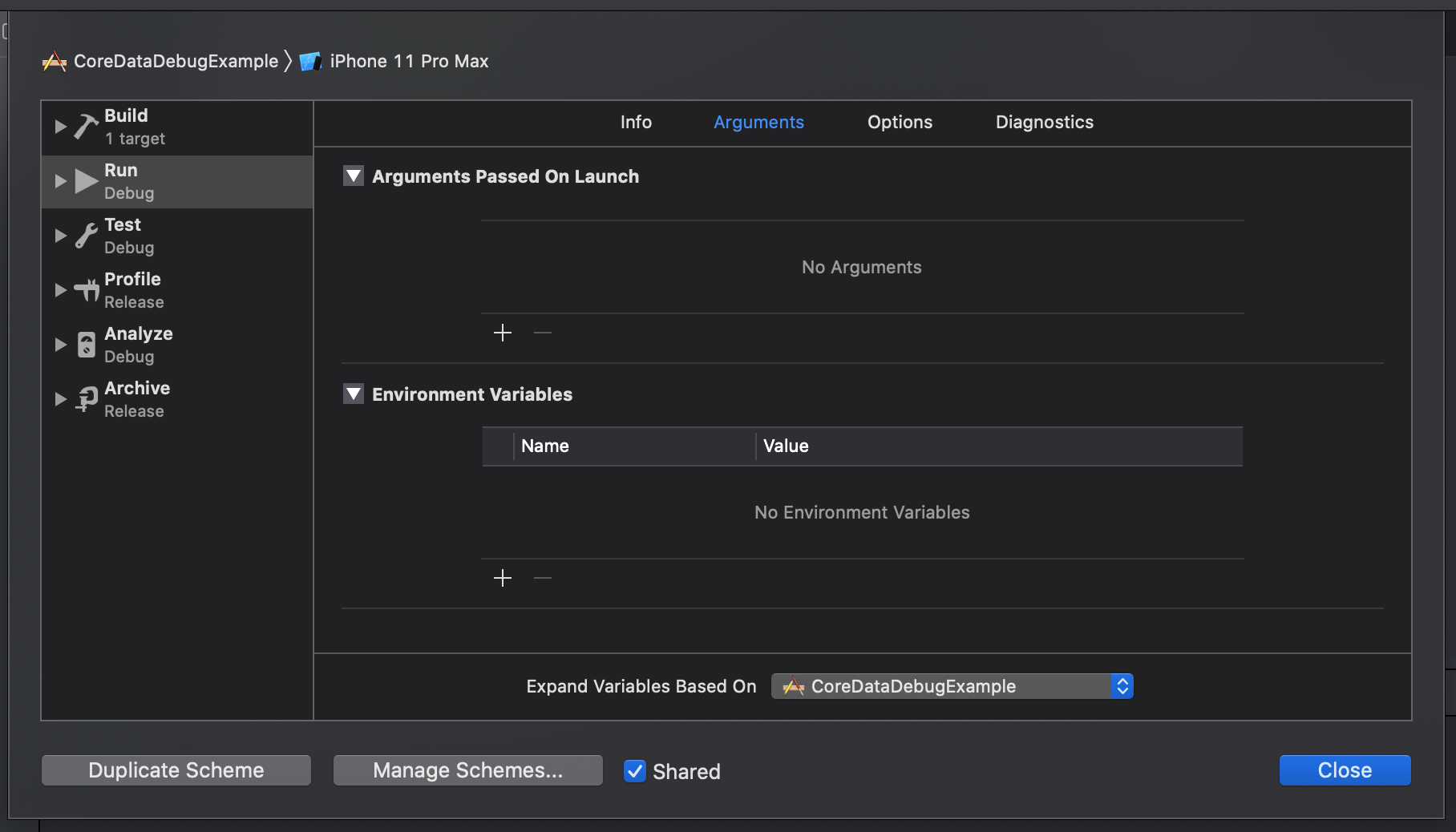

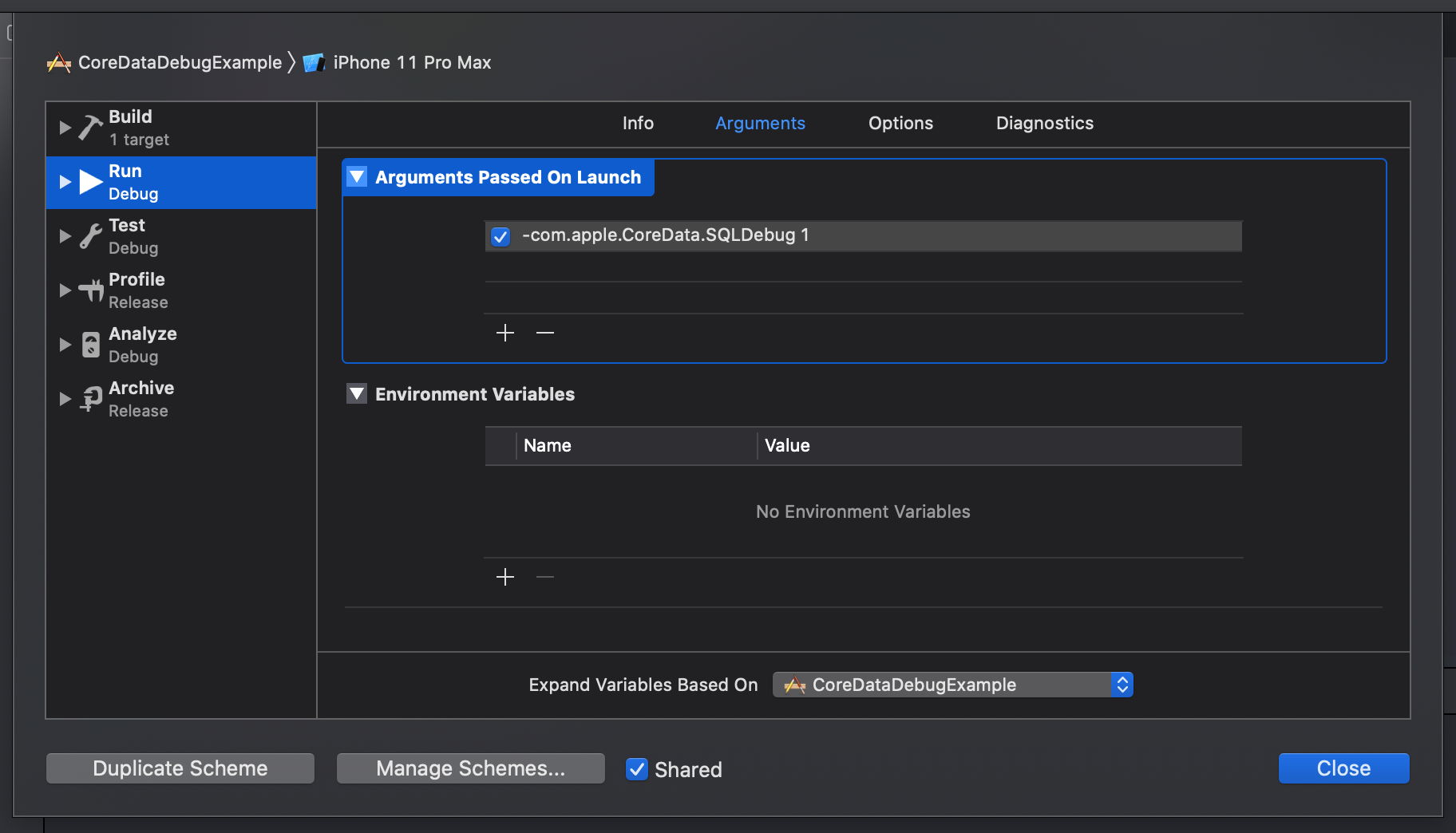

If you want to access a user's location in the background, you can ask them for this permission using the following code:

    import CoreLocation

    let locationManager = CLLocationManager()

    func askAlwaysPermission() {
        // Prompts the user when the authorization status is .notDetermined.
        locationManager.requestAlwaysAuthorization()
    }

This code will cause the location permission dialog to pop up if the current CLAuthorizationStatus is .notDetermined. The user can now choose to deny location access, allow once or to allow access while in use. If the user chooses access while in use, your location manager's delegate will be informed of the choice and the current authorization status will be .authorizedAlways.
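To see the provisional status for yourself, you can log the status your delegate receives. The following is a minimal sketch and not a complete implementation. The class name `LocationPermissionObserver` and the `describe(_:)` helper are names I made up for illustration. The delegate method is the iOS 13 variant, which was deprecated in later iOS versions.

```swift
import CoreLocation

// Hypothetical helper that turns a status into a readable label.
func describe(_ status: CLAuthorizationStatus) -> String {
    switch status {
    case .notDetermined: return "not determined"
    case .restricted: return "restricted"
    case .denied: return "denied"
    case .authorizedWhenInUse: return "when in use"
    // On iOS 13 this can be provisional: the user may have picked "while in use".
    case .authorizedAlways: return "always (possibly provisional)"
    @unknown default: return "unknown"
    }
}

// Hypothetical wrapper class; the name is not part of Core Location.
final class LocationPermissionObserver: NSObject, CLLocationManagerDelegate {
    let locationManager = CLLocationManager()

    override init() {
        super.init()
        locationManager.delegate = self
    }

    func askAlwaysPermission() {
        locationManager.requestAlwaysAuthorization()
    }

    // iOS 13 delegate callback for authorization changes.
    func locationManager(_ manager: CLLocationManager,
                         didChangeAuthorization status: CLAuthorizationStatus) {
        print("Authorization changed: \(describe(status))")
    }
}
```

Keep a strong reference to the observer for as long as you want to receive callbacks, for example by storing it as a property on your app delegate or a view controller.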

But wait. The user chose when in use! Why are we now able to always access the user's location?

Because Apple is making background location access more user-centered, your app is tricked into thinking it has access to the user's location even when the app is in the background. iOS does this by giving your app provisional authorization. You are expected to handle this scenario just like you would normally. Set up your geofencing, start listening for location updates and more. When your app is eventually backgrounded and tries to use the user's location, iOS will wait for an appropriate moment to inform the user that you want to use their location in the background. The user can then choose to either allow this or to deny it.

Note that your app is not aware of this interaction and location events in the background are not delivered to your app until the user has explicitly granted you permission to access their location in the background. This new experience for the user means that you must ensure that your user understands why you need access to their location in the background. If you're building an app that gives a user suggestions for great coffee places near their current location, you probably don't need background location access. All you really need to know is where the user is while using your app so you can give them good suggestions that are nearby. In this example, it's very unlikely that the user will understand why the background permission dialog pops up and they will likely deny access.

However, if you're a home automation app that uses geofences to execute a certain home automation when a user enters or leaves a certain area, it makes sense for you to need the always access permission so you can execute a specific automation even if the user isn't actively using your app.

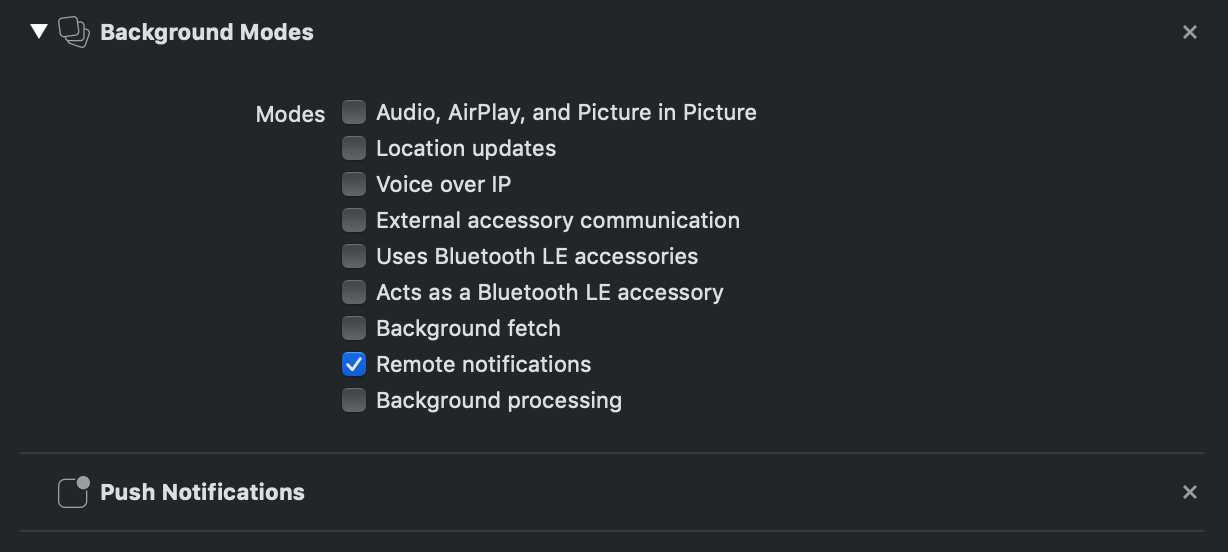

Speaking of geofences and similar APIs that required always authorization in iOS 12, iOS 13 allows you to set up and monitor geofences and listen for significant location changes even if your app only has when in use permission. This allows you to monitor geofences and more as long as your app is active. Usually, your app is active when it's foregrounded but there are exceptions.
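As a rough illustration of what setting up a geofence looks like, here is a small sketch. The function names, the identifier, the coordinate and the radius are all placeholder values I picked for this example, so swap in your own.

```swift
import CoreLocation

// Hypothetical factory; identifier, coordinate and radius are placeholders.
func makeExampleRegion() -> CLCircularRegion {
    let center = CLLocationCoordinate2D(latitude: 52.3702, longitude: 4.8952)
    let region = CLCircularRegion(center: center,
                                  radius: 100, // meters
                                  identifier: "example-geofence")
    region.notifyOnEntry = true
    region.notifyOnExit = true
    return region
}

// Hypothetical helper that starts monitoring if the device supports it.
func startMonitoringExampleGeofence(with manager: CLLocationManager) {
    guard CLLocationManager.isMonitoringAvailable(for: CLCircularRegion.self) else {
        return
    }
    manager.startMonitoring(for: makeExampleRegion())
}
```

Entry and exit events are delivered to your location manager's delegate through `locationManager(_:didEnterRegion:)` and `locationManager(_:didExitRegion:)`.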

Sometimes you don't really need always authorization but you do want to allow your user to send your app to the background while you access their location. For example, you might be building some kind of scavenger hunt app where a user must enter a geofence that you've set up. In that case, you can set the allowsBackgroundLocationUpdates property on your location manager to true and your app will show the blue activity indicator on the user's status bar. While this activity indicator is present, your app remains active and location-related events are delivered to your app.
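A minimal sketch of opting in to background updates could look like the code below. The function names are hypothetical. Note that this assumes your app has the location background mode enabled (the `location` value under `UIBackgroundModes` in your Info.plist), because setting `allowsBackgroundLocationUpdates` to true without it is a programmer error.

```swift
import CoreLocation

// Hypothetical helper: start a session that keeps running when the app is backgrounded.
// Assumes "location" is listed under UIBackgroundModes in Info.plist.
func beginBackgroundCapableSession(with manager: CLLocationManager) {
    manager.allowsBackgroundLocationUpdates = true
    manager.startUpdatingLocation()
}

// Hypothetical helper: stop the session once the user is done.
func endBackgroundCapableSession(with manager: CLLocationManager) {
    manager.stopUpdatingLocation()
    manager.allowsBackgroundLocationUpdates = false
}
```

Turning the property off again when the session ends keeps your app from holding on to the user's location longer than they expect.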

All in all, you shouldn't have to change much, if anything at all, for the new location permission strategy in iOS 13. Your app's location permissions will change according to the user's preferences just like they always have. The biggest change is in the UI and the fact that the user will not be prompted for always authorization until you actually try to use their location in the background.

Let's look at another change in iOS 13 that enables users to allow your app to access their location once.

Understanding what it means when a user allows your app to access their location once

Along with provisional authorization for accessing the user's location in the background, users can now choose to allow your app to access their location once. If a user chooses this option when the location permissions dialog appears, your app will be given the .authorizedWhenInUse authorization status. This means that your app can't detect whether you will always be able to access the user's location when they're using your app, or if you can access their location only for the current session. This, again, is a change that Apple made to improve the user's experience and privacy.

If a user chooses the allow once permission option, your app can access their current location until your app is moved to the background and becomes inactive. If your app becomes active again, you have to ask for location permission again.

It's recommended that you don't ask for permission as soon as the app launches. Instead, think about why you asked for location permission in the first place. The user probably was trying to do something with your app where it made sense to access their location. You should wait for the next moment where it makes sense to access the user's location rather than asking them for permission immediately when your app returns to the foreground.
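One way to follow this advice is to check the authorization status at the moment the user performs an action that needs their location. The sketch below assumes that the status is back to .notDetermined once a one-time permission has expired, which matches my understanding of iOS 13 but is worth verifying in your own app. `LocationAction`, `nextAction(for:)` and `userTappedFindNearby(manager:)` are names I made up for this example.

```swift
import CoreLocation

// Hypothetical decision type; not part of Core Location.
enum LocationAction: Equatable {
    case requestPermission
    case useLocation
    case explainDenied
}

// Maps the current status to what the app should do next.
func nextAction(for status: CLAuthorizationStatus) -> LocationAction {
    switch status {
    case .notDetermined: return .requestPermission
    case .authorizedWhenInUse, .authorizedAlways: return .useLocation
    case .restricted, .denied: return .explainDenied
    @unknown default: return .explainDenied
    }
}

// Call this from the user action that needs a location,
// for example a hypothetical "Find nearby" button.
func userTappedFindNearby(manager: CLLocationManager) {
    // authorizationStatus() is the iOS 13 API; it was deprecated in later versions.
    switch nextAction(for: CLLocationManager.authorizationStatus()) {
    case .requestPermission:
        manager.requestWhenInUseAuthorization()
    case .useLocation:
        // requestLocation() requires a delegate that implements both
        // didUpdateLocations and didFailWithError.
        manager.requestLocation()
    case .explainDenied:
        break // For example, show UI that points the user to Settings.
    }
}
```

Because the permission request is tied to a user action, the dialog shows up in a context where the user understands why you need their location.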

If you think your app should have access to the user's location even if the app is backgrounded for a while, you can set the location manager's allowsBackgroundLocationUpdates to true and your app's session remains active until the user decides to stop it. This would be appropriate for an app that tracks hikes or runs where a user would expect you to continue actively tracking their location even while their phone is in their pocket.

Similar to provisional authorization, this change shouldn't impact your code too much. If you're already dealing with your user's locations in a careful and considerate way, chances are that everything in your app will work perfectly fine with the new allow once permission.

In Summary

Apple's efforts to ensure that your user's data is safe and protected are always ongoing. This means that they sometimes make changes to how iOS works that impact our apps in surprising ways and I think that location access in iOS 13 is no exception to this. It can be very surprising when you're developing your app on iOS 13 and things don't work like you're used to.

In this blog post, you learned what changes Apple has made to location permissions, and how they impact your code. You learned that apps now get provisional access to a user's location in the background and that provisional access can be converted to permanent access if you attempt to use a user's location in the background and they allow it. You also learned that your app won't receive any background location events until the user has explicitly allowed this.

In addition to provisional location access, you learned about one-time location access. You saw that your app will think it has the while in use permission and that this permission will be gone the next time the user launches your app. This means that you should make sure to ask for location permissions when it makes sense rather than doing this immediately. Poorly timed location permission dialogs are far more likely to result in a negative response from a user than a well thought out experience.

If you have any questions or feedback about this post or any other post on my blog, make sure to reach out on Twitter.